Here’s A Quick Way To Deal With Multicollinearity

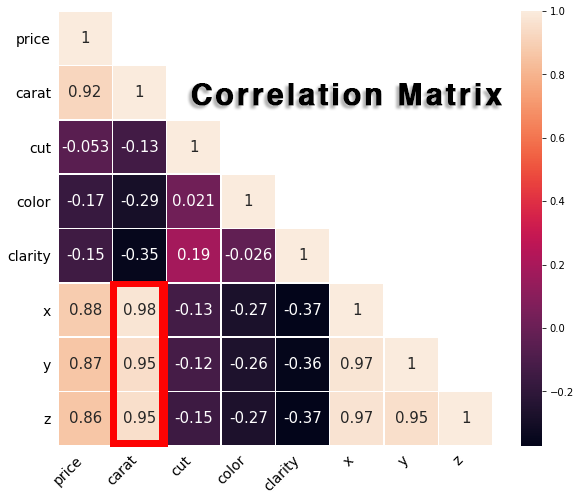

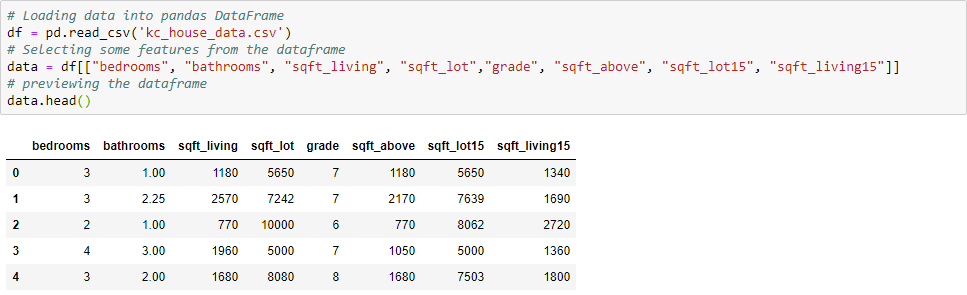

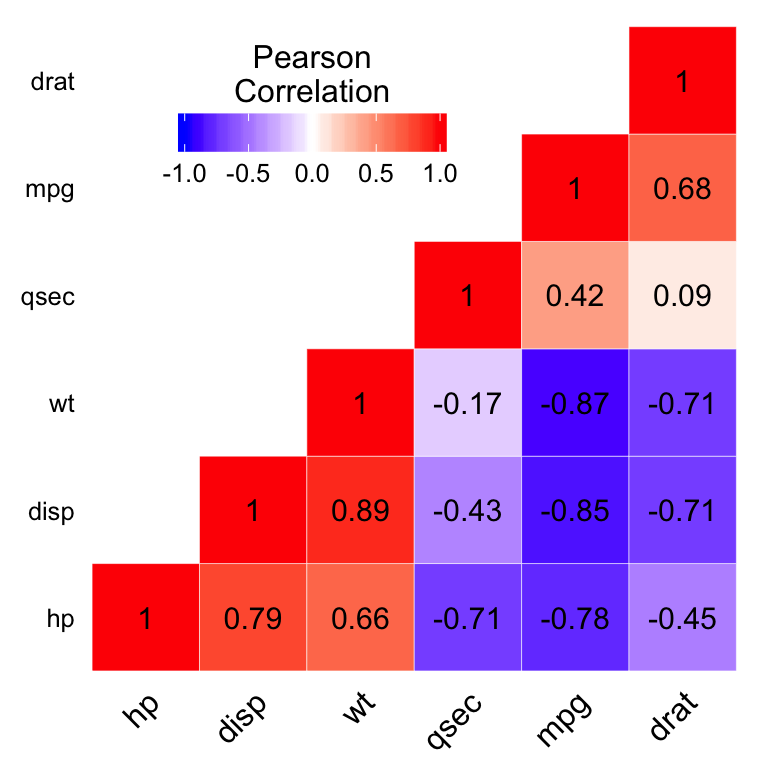

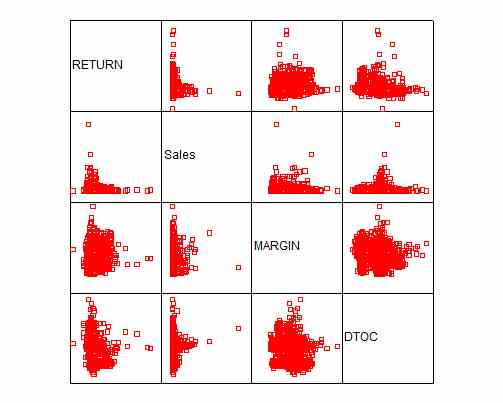

By calculating the correlation coefficient between pairs of predictive features, you can identify features that may be contributing to multicollinearity.
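As a minimal sketch, assuming the predictors are numeric columns of a NumPy array, this pairwise check can be done with `np.corrcoef`. The data below are synthetic (`x2` is deliberately built as a noisy copy of `x1`), and both the 0.8 threshold and the helper name `correlated_pairs` are illustrative choices, not fixed standards.

```python
import numpy as np

def correlated_pairs(X, names, threshold=0.8):
    """Return (name_i, name_j, r) for predictor pairs with |r| >= threshold.

    The threshold is an illustrative assumption, not a standard cutoff.
    """
    corr = np.corrcoef(X, rowvar=False)  # columns are variables
    pairs = []
    p = corr.shape[0]
    for i in range(p):
        for j in range(i + 1, p):  # upper triangle only, skip the diagonal
            if abs(corr[i, j]) >= threshold:
                pairs.append((names[i], names[j], float(corr[i, j])))
    return pairs

# Synthetic example data
rng = np.random.default_rng(0)
n = 200
x1 = rng.normal(size=n)
x2 = x1 + rng.normal(scale=0.1, size=n)  # nearly a copy of x1
x3 = rng.normal(size=n)                  # independent of x1 and x2
X = np.column_stack([x1, x2, x3])

for a, b, r in correlated_pairs(X, ["x1", "x2", "x3"]):
    print(f"{a} vs {b}: r = {r:.3f}")
```

Only the `x1`/`x2` pair should be flagged here. Note that pairwise correlations can miss multicollinearity that involves three or more predictors jointly, which is one reason the VIF is also used.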

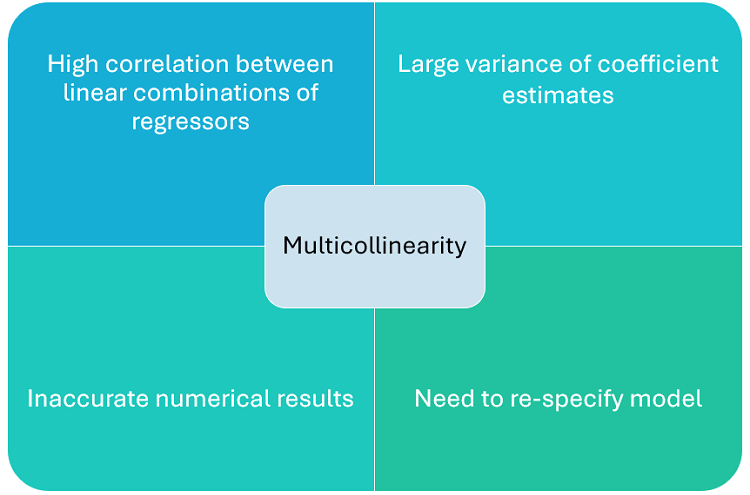

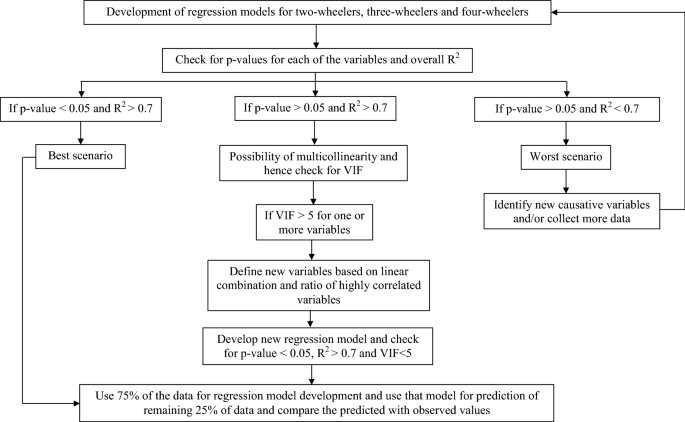

Because the various x variables are correlated with one another, regression has trouble estimating their separate coefficients: the estimates become unstable and their standard errors are inflated. Ridge regression can be used when the data are highly collinear. If you detect multicollinearity, the next step is to decide whether you need to resolve it.
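The sketch below compares ordinary least squares with ridge regression on synthetic, highly collinear data, using the closed-form ridge estimate `beta = (X'X + lam*I)^-1 X'y` on centered data. The penalty `lam=10.0`, the helper name `ridge_fit`, and the data-generating setup are assumptions for illustration; in practice you would pick the penalty by cross-validation and usually standardize the predictors first.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form ridge: beta = (X'X + lam*I)^-1 X'y on centered data.

    Centering X and y removes the need for an intercept column, so the
    intercept is not penalized. lam = 0 reduces to ordinary least squares.
    """
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    p = Xc.shape[1]
    return np.linalg.solve(Xc.T @ Xc + lam * np.eye(p), Xc.T @ yc)

# Synthetic predictors: x2 is almost identical to x1
rng = np.random.default_rng(1)
n = 50
x1 = rng.normal(size=n)
x2 = x1 + rng.normal(scale=0.05, size=n)
X = np.column_stack([x1, x2])

ols_coefs, ridge_coefs = [], []
for _ in range(200):
    # Same predictors, fresh noise each time; true model is y = x1 + x2 + noise
    y = x1 + x2 + rng.normal(size=n)
    ols_coefs.append(ridge_fit(X, y, lam=0.0))
    ridge_coefs.append(ridge_fit(X, y, lam=10.0))

ols_coefs = np.array(ols_coefs)
ridge_coefs = np.array(ridge_coefs)
print("OLS   coefficient std:", ols_coefs.std(axis=0).round(2))
print("Ridge coefficient std:", ridge_coefs.std(axis=0).round(2))
```

Refitting on fresh noise shows how much each coefficient moves: the OLS estimates swing widely because the data can barely tell `x1` and `x2` apart, while ridge accepts a little bias (shrinking the coefficients toward zero) in exchange for much lower variance.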

To identify multicollinearity, you can start by looking at scatterplots of the predictors against one another to get an initial idea of how they relate. Because multicollinearity affects the regression coefficients and not the model's predictions (as long as new data follow the same correlation pattern), it may not need to be resolved if prediction is your only goal. Standardization does not affect the correlation between predictors.
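A quick way to check the last claim, sketched on synthetic data (the helper `standardize` is hypothetical): Pearson correlation is unchanged by shifting or positively rescaling either variable, so standardizing two predictors leaves their correlation exactly where it was.

```python
import numpy as np

def standardize(v):
    """Center to mean 0 and scale to standard deviation 1."""
    return (v - v.mean()) / v.std()

# Synthetic, correlated predictors on an arbitrary scale
rng = np.random.default_rng(2)
a = rng.normal(loc=50, scale=10, size=500)
b = 0.8 * a + rng.normal(scale=5, size=500)

r_raw = np.corrcoef(a, b)[0, 1]
r_std = np.corrcoef(standardize(a), standardize(b))[0, 1]
print(f"raw r = {r_raw:.6f}, standardized r = {r_std:.6f}")
```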

When some of the predictor variables are too similar to, or too closely correlated with, one another, it becomes very difficult to separate their individual effects. Let me start with a fallacy. In general, there are three options to handle multicollinearity.

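A rule of thumb is that if the variance inflation factor (VIF) exceeds 10, multicollinearity is high (a cutoff of 5 is also commonly used). One way to address multicollinearity is to center the predictors, that is, subtract each series' mean from each of its values. Centering reduces the correlation between a predictor and terms built from it, such as its square or its interactions, but it does not change the correlation between two distinct predictors. In this blog, we will examine how to identify and address multicollinearity.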
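A minimal sketch of computing the VIF directly from its definition, `VIF_j = 1 / (1 - R_j^2)`, where `R_j^2` comes from regressing predictor `j` on all the other predictors plus an intercept. The data are synthetic and the `vif` helper is hypothetical; statsmodels provides `statsmodels.stats.outliers_influence.variance_inflation_factor` for the same purpose, but it expects you to include a constant column in the design matrix yourself.

```python
import numpy as np

def vif(X):
    """Variance inflation factor for each column of X.

    VIF_j = 1 / (1 - R_j^2), where R_j^2 is from an OLS regression of
    column j on all other columns plus an intercept.
    """
    n, p = X.shape
    out = np.empty(p)
    for j in range(p):
        target = X[:, j]
        others = np.delete(X, j, axis=1)
        A = np.column_stack([np.ones(n), others])  # add intercept column
        coef, *_ = np.linalg.lstsq(A, target, rcond=None)
        resid = target - A @ coef
        r2 = 1.0 - (resid @ resid) / np.sum((target - target.mean()) ** 2)
        out[j] = 1.0 / (1.0 - r2)
    return out

# Synthetic data: x1 and x2 are strongly related, x3 is independent
rng = np.random.default_rng(3)
n = 300
x1 = rng.normal(size=n)
x2 = x1 + rng.normal(scale=0.2, size=n)
x3 = rng.normal(size=n)
X = np.column_stack([x1, x2, x3])

for name, v in zip(["x1", "x2", "x3"], vif(X)):
    flag = "high" if v > 10 else "ok"  # rule-of-thumb cutoff from the text
    print(f"{name}: VIF = {v:.1f} ({flag})")
```

Here `x1` and `x2` should both exceed the cutoff of 10, while `x3` stays close to 1.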

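To reduce the effects of multicollinearity, we can use regularization, which means adding a penalty on the size of the coefficients to the model's loss function. Often the easiest way to deal with multicollinearity is simply to remove one of the problematic variables, since the information it carries is largely captured by the variables it is correlated with. Some suggest that standardization helps with multicollinearity, but it does not change the correlation between two distinct predictors; it only helps with the correlation between a predictor and polynomial or interaction terms built from it.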
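The sketch below, on synthetic data, illustrates when centering (and therefore standardization) helps and when it does not. Centering a strictly positive predictor before squaring it removes most of the correlation between `x` and `x**2`, while centering two distinct correlated predictors leaves their correlation unchanged. The variable names and value ranges are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(4)
x = rng.uniform(10, 20, size=1000)         # strictly positive predictor
w = x + rng.normal(scale=0.5, size=1000)   # a distinct predictor correlated with x

# Structural case: x and x**2 are built from the same uncentered variable
r_raw = np.corrcoef(x, x ** 2)[0, 1]
xc = x - x.mean()
r_centered = np.corrcoef(xc, xc ** 2)[0, 1]

# Data case: centering two distinct predictors does not change their correlation
r_xw = np.corrcoef(x, w)[0, 1]
r_xw_centered = np.corrcoef(xc, w - w.mean())[0, 1]

print(f"corr(x, x^2): raw = {r_raw:.3f}, centered = {r_centered:.3f}")
print(f"corr(x, w):   raw = {r_xw:.3f}, centered = {r_xw_centered:.3f}")
```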